The National Association of Broadcasters held its annual convention in Las Vegas this week. The NAB Show attracted 65K attendees and 1,300 exhibitors from 41 countries, showing off their products in an exhibition that spans four halls of the Las Vegas Convention Center. The North Hall is currently closed for construction as part of an ongoing major remodel of the entire complex.

With North Hall closed, West Hall is now far removed from the rest of the show, which makes the Loop (a fleet of Tesla cars driving through narrow tunnels deep under the complex) even more useful for getting there and back. They are adding new stops to the system, heading farther west and south, but I find it hard to believe that the system will scale well, since the main station already appears to be at peak capacity with only three possible destinations.

“SMPTE 2110” is competing with “A.I.” as the most popular buzzword of this year’s show. In previous years it was 3D, 4K, HDR, IP video, NDI, or other things, but this year SMPTE 2110 is finally emerging as the most broadly supported approach to IP video. IP video is the process of moving real-time video over standard IP networking infrastructure. 2110 competes with NDI, Dante, IPMX, and other approaches, several of them proprietary, that have been marketed with varying degrees of success over the last few years. The 2110 family of standards still allows a number of variations with regard to supported codecs, from uncompressed to JPEG 2000 (J2K) and JPEG-XS, among others, and it isn’t always clear which variations a new product supports. But that is clearly the direction IP video is headed, especially for cloud-based solutions. NDI isn’t going to disappear on the low end, but I expect 2110 to dominate the high-end market, initially with uncompressed implementations, hopefully followed by efficient compression like JPEG-XS as those products mature and aggregate bandwidths begin to pile up. There were new 2110 products all over the show, some of which I will explore here.
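
To put the bandwidth point in numbers, here is a minimal sketch of the uncompressed active-video bit rate (width × height × bits per pixel × frame rate). It assumes 10-bit 4:2:2 sampling (20 bits per pixel on average) and ignores packet overhead; the 10:1 JPEG-XS ratio is a hypothetical figure chosen only for illustration. Uncompressed UHD at 59.94p needs about 9.94 Gb/s for the pixels alone, which leaves essentially no headroom on a 10GbE link once packet headers are added, so it is typically carried on 25GbE or faster links.

```python
def active_video_gbps(width, height, fps, bits_per_pixel=20):
    """Uncompressed active-video bit rate in Gbit/s.

    bits_per_pixel=20 assumes 10-bit 4:2:2 sampling (10 bits of luma plus
    10 bits of alternating Cb/Cr per pixel). RTP/UDP/IP/Ethernet packet
    overhead is ignored, so real 2110 streams are somewhat larger.
    """
    return width * height * bits_per_pixel * fps / 1e9


FORMATS = {
    "HD 1080p59.94": (1920, 1080, 60000 / 1001),
    "UHD 2160p29.97": (3840, 2160, 30000 / 1001),
    "UHD 2160p59.94": (3840, 2160, 60000 / 1001),
}

ASSUMED_XS_RATIO = 10  # hypothetical JPEG-XS compression ratio, for illustration only

for name, (w, h, fps) in FORMATS.items():
    raw = active_video_gbps(w, h, fps)
    print(f"{name}: {raw:.2f} Gb/s uncompressed, "
          f"~{raw / ASSUMED_XS_RATIO:.2f} Gb/s at {ASSUMED_XS_RATIO}:1")
```

Multiply these per-stream figures by the number of cameras and feeds on a network and it is easy to see why efficient compression becomes attractive as systems grow.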

As always, Blackmagic Design has a wide variety of new products on display across their product lines, and their booth dominates the entrance to South Hall. The primary featured product is a new 12K camera with a full-frame sensor, called the Ursa Cine 12K Camera, along with its supporting accessories. Alongside it is a new Pyxis 6K camera, which also has a full-frame sensor but a quarter of the total pixel count. They also have minor updates across the rest of their studio and broadcast camera products.

On the software front, Resolve is now on version 19, with added support for live media playout and instant replays. Fusion is now on version 18, and Fusion Connect integrates the application with Avid Media Composer. These software updates dovetail with a number of new hardware interfaces, including a Micro Resolve Color Panel that works with an iPad or a computer, a Replay Edit controller that gives replay operators a dedicated hardware interface, and a new video I/O device, the Media Player 10G. The Media Player 10G extends the previous UltraStudio products: it is a Thunderbolt-attached SDI and HDMI interface supporting multiple streams, but with an added 10G Ethernet port. That port does not support SMPTE 2110 input or output, which seems like a huge missed opportunity, and apparently there is currently no other way to get UHD 2110 directly out of Resolve. I imagine we will see a software solution for that in the not too distant future (ideally one that works with any 10GbE or faster connection, not just a dedicated card). The Media Player 10G can also supply power over Thunderbolt, essentially making it a laptop docking station, and includes an RS-422 port to support using Resolve as an automated playback server.

Where Blackmagic Design is leaning into SMPTE 2110 is with their mini converters and a new network switch. The new mini converters are pretty straightforward: they convert 2110 streams over 10GbE ports to and from SDI or HDMI. The 360P 16-port 10GbE network switch is more interesting to me. Any switch ‘should’ work for 2110, but this one also includes a button panel on the other side so it can be operated like an SDI router. My understanding is that this panel is a totally separate device within the 1U box, which routes multicast subscriptions via NMOS. I have heard of NMOS, but I still haven’t wrapped my head around exactly how it works. Anyhow, there is no reason for the panel to be physically attached to the network switch, and Blackmagic already has a standalone router controller for SDI, so I am told that existing controllers should be able to route 2110 as well with an upcoming software update. Interestingly, many of Blackmagic’s new 2110 converters support PoE input, so they can be powered directly from the attached Ethernet cables, but the new Blackmagic Ethernet Switch does not support PoE output. So most users will probably look to Netgear for that, since Netgear seems to be making a name for itself in switching IP-based video and offers PoE support.
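
For readers in the same position on NMOS: it is a family of AMWA specifications, where IS-04 covers discovery and registration and IS-05 covers connection management. Roughly speaking, a "take" on a router panel corresponds to a controller PATCHing a receiver's `/staged` endpoint with a sender's ID and an SDP file describing the stream; with immediate activation, the receiver then subscribes to that sender's multicast group. The sketch below only builds the request, assuming an IS-05 v1.1 node; the node URL, IDs, and SDP are hypothetical placeholders, and nothing is sent over the network.

```python
import json


def is05_receiver_patch(node_base_url, receiver_id, sender_id, sdp_text):
    """Build (url, body) for an NMOS IS-05 'staged' PATCH that connects a
    receiver to a sender and activates the change immediately.

    Assumes the node exposes IS-05 v1.1 at node_base_url; nothing is sent.
    """
    url = (f"{node_base_url}/x-nmos/connection/v1.1/single/receivers/"
           f"{receiver_id}/staged")
    body = {
        "sender_id": sender_id,  # which sender this receiver should follow
        "master_enable": True,   # enable the receiver
        "activation": {"mode": "activate_immediate"},
        # The SDP file tells the receiver which multicast group, port, and format to use
        "transport_file": {"data": sdp_text, "type": "application/sdp"},
    }
    return url, body


# Hypothetical values for illustration only (192.0.2.x is a documentation range)
SDP = ("v=0\r\n"
       "o=- 1 1 IN IP4 192.0.2.10\r\n"
       "s=Camera 1\r\n"
       "t=0 0\r\n"
       "m=video 5004 RTP/AVP 96\r\n"
       "c=IN IP4 239.1.1.1/64\r\n"
       "a=rtpmap:96 raw/90000\r\n")

url, body = is05_receiver_patch("http://192.0.2.20:8080",
                                "receiver-uuid-placeholder",
                                "sender-uuid-placeholder", SDP)
print("PATCH", url)
print(json.dumps(body, indent=2))
```

A controller would send this body with an HTTP PATCH; keeping the routing logic in a controller that only speaks HTTP is what allows a panel like this to live anywhere on the network rather than inside the switch.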

Adobe was more focused on AI than 2110 in its booth, which should come as no surprise to anyone following its Sensei and Firefly developments over the past few years. While no new AI-driven features are arriving in its products at this moment, Adobe is offering a look at the future of AI for video: creating new shots or extending existing ones with AI-generated frames directly within Premiere Pro, as well as adding and removing items in existing footage. If the final product is as powerful as the demos imply, we are in for quite a shift in potential workflows, especially for lower-budget projects.

Adobe has also added a number of new features to Premiere Pro since version 2024 was initially released last October. Most recently, the public beta has gained scrolling to zoom and pan the playback panels, along with broader control over proxy workflows. The biggest new feature to reach the main release is Enhanced Speech, an AI-based tool for cleaning up the audio in dialog recordings, which is startlingly ‘AI good’ in many cases. There are also more options for text styles and colored fonts, and new controls for timeline markers. Newly supported formats include Sony Burano footage, along with export of 16-bit PNG files and H.264 MOV files. After Effects has continued to add support for true 3D objects directly in the program, with beta support for animated models, z-depth map creation, and new shadow controls.

Frame.io, which is now part of Adobe, just released a major update as well. Version 4 unifies the sharing options, with much more control over the look and feel of your presentation, and gives more control over the UI, with all of the panels available at once for users with larger screens. They have also further refined the available user roles for Pro accounts, and added forensic watermarking for Enterprise accounts, which in my opinion is a huge improvement over the previous burn-in options. There is greater support for metadata and sorting, and customizable status settings to keep track of where media is in the approval pipeline. Combined with Camera to Cloud, this allows Frame.io to be used throughout a modern cloud workflow.

Canon was showing off their various cameras and huge selection of lenses. They also have PTZ cameras that are apparently being used in productions alongside their larger cinema cameras, so they now offer a software tool that helps color match the cameras, making it easier to cut between them in live productions. The tool analyzes the output feeds and then creates individual LUTs that can be loaded into the PTZ cameras and applied to the live output.
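
Canon hasn't described its matching algorithm here, so the following is only a stand-in to show the general idea of deriving a matching LUT from two feeds: simple per-channel mean and standard-deviation matching, which turns into a gain and offset per RGB channel baked into a 1D LUT. The function name and pixel samples are hypothetical; a production tool would more likely build 3D LUTs, which can also correct cross-channel color shifts.

```python
from statistics import fmean, pstdev


def matching_lut(source, reference, size=1024):
    """Build a per-channel 1D LUT that maps the source camera's statistics
    onto the reference camera's.

    source, reference: lists of (r, g, b) tuples in 0..1, sampled from the
    same scene on both cameras. Returns `size` (r, g, b) output entries.
    """
    params = []
    for ch in range(3):
        s = [px[ch] for px in source]
        r = [px[ch] for px in reference]
        s_sd = pstdev(s) or 1.0              # guard against a perfectly flat channel
        gain = pstdev(r) / s_sd              # match contrast (spread)
        offset = fmean(r) - gain * fmean(s)  # then match average level
        params.append((gain, offset))

    lut = []
    for i in range(size):
        x = i / (size - 1)                   # input code value, normalized to 0..1
        lut.append(tuple(min(1.0, max(0.0, g * x + o)) for g, o in params))
    return lut


# Hypothetical pixel samples of the same scene from a PTZ camera and a cinema camera
ptz = [(0.2, 0.3, 0.4), (0.4, 0.5, 0.6)]
cine = [(0.25, 0.3, 0.35), (0.55, 0.5, 0.45)]
lut = matching_lut(ptz, cine, size=11)
print(lut[2])  # output for a PTZ input of 0.2 on every channel
```

Loading a table like this into the PTZ camera means the correction runs on the camera's live output, so the switcher simply sees two feeds that already look alike.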

They were also showing off their Stereo 180 VR lenses for mirrorless cameras. I am hoping to test out and write up the Stereo 180 VR workflow later this year, as I see it as a much more practical option than 360 video. (Most people rarely look behind themselves in VR anyway.)

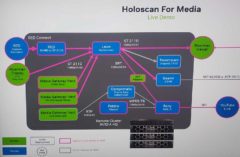

NVidia was pushing its Holoscan platform for implementing AI in media workflows. This of course includes support for 2110 IP video exchange, along with other GPU-accelerated functionality. Of their many partners and applications, the one I found most interesting was a solution for taking a RAW 8K feed from a RED camera, processing it to UHD, and retransmitting it as a 2110 stream. Cinnafilm was showing their TachyonLive solution, which can pick up a 2110 stream like that and apply motion-compensated frame rate conversion to make the live feed available in other formats.

And Dell was demonstrating a Holoscan-based solution for forwarding 2110 streams to YouTube Live for wide distribution. NVidia also announced two new lower-end discrete PCIe GPUs during the show: the RTX A400, and the RTX A1000, which has three times the processing power of the A400 and double its memory size and bandwidth. Interestingly, both are based on the Ampere architecture, which was first released back in 2020. But with 2304 CUDA cores, the A1000 should have roughly three quarters the performance of the existing A2000, which might be enough for many HD users.

HP was showing off their new-ish mobile workstations, based on Intel’s new Meteor Lake SoCs, which combine a CPU, GPU, and NPU (Neural Processing Unit, for AI acceleration) in a single package. These are now branded ‘Core Ultra’, and the professionally focused vPro variants were first released in February. Even HP’s lightest ‘ZBook Firefly’ line now has the option of a dedicated GPU, in the form of the RTX A500, which was also just released. So I am excited to eventually continue my experiment of figuring out how light a system I can use and still get work done effectively. Having an NVidia GPU, even a weaker model, allows CUDA code execution, along with hardware-accelerated HEVC encode and decode and AV1 decode, so that should be sufficient for most of my lighter editing projects.

LucidLink is a cloud-based storage company focused on media production. They offer shared access to block-based storage that presents itself as a local drive on your workstation. Files are streamed from the cloud but cached locally at the block level, so if you only need a few frames of a large asset in your project, you don’t have to download the entire file, only the part you need, and all of this is handled automatically. I started using LucidLink last year, once I was able to upgrade my home internet from 6 Mbps to 300 Mbps, which allows reasonable speeds for cloud-based workflows. At the show, LucidLink announced upcoming support for iOS and Android access to cloud files, as well as browser-based uploads and downloads for systems that don’t have the full client installed. They will also add the option of public links to assets, so users can share their files with non-users. As an existing user of the service, I am excited to see what is coming.
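
The block-level caching idea can be sketched as a small read-through cache: a read is mapped to the fixed-size blocks it touches, only missing blocks are fetched, and later reads of the same region come from the local cache. This is a toy model, not LucidLink's implementation; the 256 KiB block size, the 2 GiB file, and the `fake_cloud_fetch` function standing in for a network request are all hypothetical.

```python
BLOCK_SIZE = 256 * 1024  # hypothetical block size, for illustration only
FILE_SIZE = 2 * 1024**3  # a hypothetical 2 GiB media file


class BlockCache:
    """Toy read-through cache that fetches only the blocks a read touches."""

    def __init__(self, fetch_block):
        self.fetch_block = fetch_block  # callable: block index -> bytes
        self.blocks = {}                # block index -> cached bytes
        self.fetches = 0                # number of simulated cloud downloads

    def read(self, offset, length):
        first = offset // BLOCK_SIZE
        last = (offset + length - 1) // BLOCK_SIZE
        data = bytearray()
        for idx in range(first, last + 1):
            if idx not in self.blocks:  # cache miss: download just this block
                self.blocks[idx] = self.fetch_block(idx)
                self.fetches += 1
            data += self.blocks[idx]
        start = offset - first * BLOCK_SIZE
        return bytes(data[start:start + length])


def fake_cloud_fetch(idx):
    # Stand-in for a network request: every byte of block idx has value idx % 256
    start = idx * BLOCK_SIZE
    size = min(BLOCK_SIZE, FILE_SIZE - start)
    return bytes([idx % 256]) * size


cache = BlockCache(fake_cloud_fetch)
chunk = cache.read(offset=1_000_000_000, length=4_000_000)  # a few frames mid-file
print(len(chunk), cache.fetches)  # 4000000 bytes served from 16 downloaded blocks
```

Reading those 4 MB from the middle of the 2 GiB file downloads only 16 blocks (4 MiB), and reading the same region again triggers no new downloads, which is the behavior that makes scrubbing large cloud-hosted media practical on a modest connection.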

Those are the highlights of my two-day trip to Vegas for the show. Of course there are thousands of other products and technologies on display across four huge halls, but these were the ones that seemed most relevant to my workflows and my perspective on the world of post production. It will be interesting to see how AI-based solutions mature in the market, and how IP video comes into its own as more companies create solutions that can be linked together via 2110 or the competing options.