Adobe held their annual MAX conference at the LA Convention Center this week. It was my first time back since Covid, but they did host an in-person event last year as well. The MAX conference is focused on Creativity, and is traditionally where Adobe announces and releases the newest updates to their Creative Cloud apps. As a Premiere editor, and of course a Photoshop user, I am always interested in seeing what Adobe’s team has been doing to improve their products, and improve my workflows. I have followed Premiere and After Effects pretty closely through the beta programs for over a decade, but MAX is where I find out about what new things I can do in Photoshop, Illustrator, and various other apps. (And via the various sessions I also learn some old things I can do as well, that I just didn’t know about yet.)

The main Keynote is generally where Adobe announces new products and initiatives, as well as new functions for their existing applications. This year was very AI focused, following up their successful ‘Firefly’ generative AI imaging tool released earlier in the year. The main feature that Adobe uses to differentiate their generative AI tools from the various competing options is that, due to their ownership of the content the models are trained on (presumably courtesy of Adobe Stock), the resulting outputs are guaranteed to be safe to use in commercial projects. Adobe sees AI as useful in four ways: broadening exploration, accelerating productivity, increasing creative control, and including community input. Adobe GenStudio will now be the hub for all things AI, integrating Creative Cloud, Firefly, Express, Frame.io, Analytics, AEM Assets and Workfront. It aims to ‘enable on-brand content creation at the speed of imagination.’

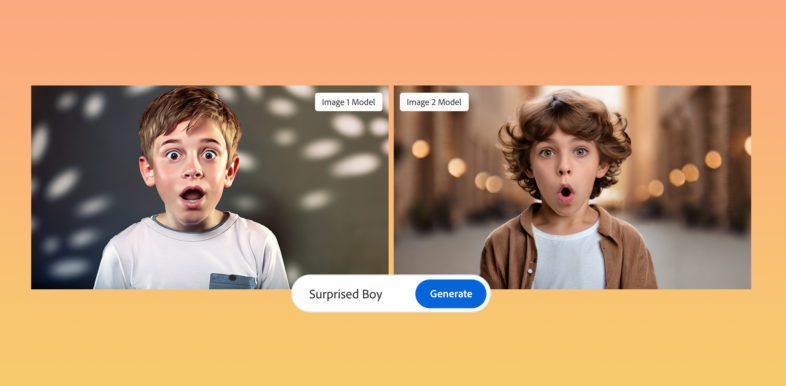

Adobe has three new generative AI models: Firefly Image 2, Firefly Vector, and Firefly Design. They also announced that they are working on Firefly Audio, Video, and 3D models, which should be available soon. I want to pair the 3D one with the new AE functionality. Firefly Image 2 has twice the resolution of the original, and can ingest reference images to match the style of the output to.

Firefly Vector is obviously for creating AI generated vector images and art.

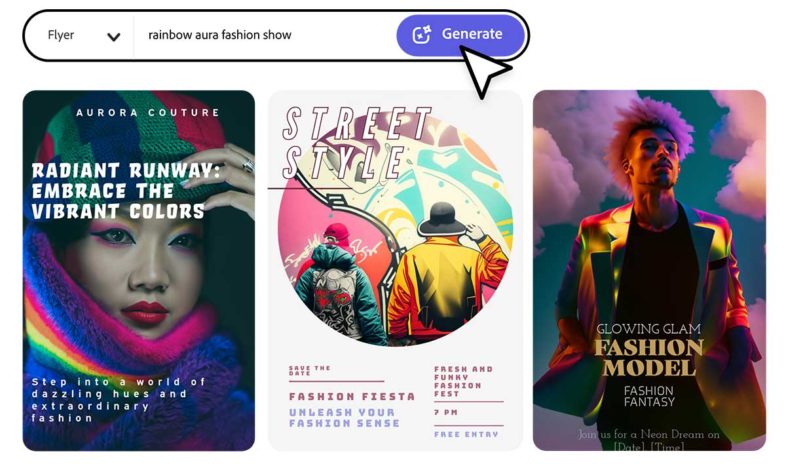

But the third one, Firefly Design, deserves further explanation. It generates a fully editable Adobe Express template document with a user-defined aspect ratio and text options. The remaining fine tuning for a completed work can be done in Adobe Express.

For those of you unfamiliar, Adobe Express is a free cloud based media creation and editing application, and that is where a lot of Adobe’s recent efforts and this event’s announcements have been focused. It is designed to streamline the workflow for getting content from the idea stage all the way to publishing on the internet, with direct integration with many social media outlets, and a full scheduling system to manage entire social marketing campaigns. It can reformat content for different deliverables, and even automatically translate it into 40 different languages. As more and more of Photoshop and Illustrator’s functionality gets integrated into Express, it will probably begin to replace them as the go-to for entry level users. And as a cloud based app accessed through a browser, it can even be utilized on Chromebooks and other devices that run neither macOS nor Windows. They also claim that via a partnership with Google, the Express browser extension will be included in all new Chromebooks moving forward.

Photoshop for Web is the next step beyond Express, integrating even more of the application’s functions into a cloud app that can be accessed from anywhere, once again including Chrome devices. I am apparently an old-school guy who has not yet embraced the move to the Cloud as much as I could have, but given my dissatisfaction with the direction the newest Microsoft and Mac OSes are going, maybe browser based applications are the future.

Similarly, as a finishing editor, I have real trouble posting content that is not polished and perfected, but that is not how social media operates. With much higher amounts of content being produced in narrow timeframes, most of which would not meet the production standards I am used to, I have not embraced the new paradigm. Which is why I am writing an article about this event, and not posting a video about it, for which I would have to spend far too much time reframing each shot, color correcting, and cleaning up any distractions in the audio.

In regards to desktop applications, within the full version of Photoshop, Firefly powered generative fill has replaced content-aware fill. You can now use generative fill to create new overlay layers based on text prompts, or remove things by overlaying AI generated background extensions. AI can also add reflections and other image processing. It can ‘un-crop’ images via Generative Expand. Separately, gradients are now fully editable, and there are now adjustment layer presets, including user definable ones.

Illustrator can now identify fonts in rasterized and vectorized images, and can even edit text that has already been converted to outlines. It can convert text to color palettes for existing artwork. It can also AI generate vector objects and scenes, which are all fully editable and scalable. It can even take in existing images as input to match to stylistically. There is also a new cloud based web version of Illustrator coming to public beta.

From the video perspective, the news was mostly familiar to existing public beta users, or those who followed the IBC announcements. In Premiere Pro, that means text based editing, pause and filler word removal, and dialog enhancement. After Effects is getting true 3D object support, so learning more about the workflows for utilizing that feature was the focus of my session schedule. You need models to be created and textured, and saved as GLB files, before they can be used in AE. And you need to set up the lighting environment in AE before they are going to look correct in your scene. But I am looking forward to being able to use that functionality more effectively on my upcoming film post-viz projects.

I will detail my experience at Wednesday’s Inspiration Keynote, and the tips and tricks that I learned in the various training sessions that I attended, in a separate posting in a few days, as the conference is still wrapping up on Thursday. But I wanted to share the more time sensitive product announcements with readers before the show ended. So keep an eye out for the second half of my MAX coverage soon.