Adobe held their MAX Creativity Conference this week, hosted in Miami for the first time. The timing raised some concern because of Hurricane Milton, but the event went ahead as scheduled, a few days after the storm ravaged the state. Thousands of people from across the US and around the world descended on the Miami Beach Convention Center to learn about upcoming developments in Adobe’s software, and to be inspired by the work of fellow artists and Adobe users.

Adobe has donated $1M to the Red Cross and has promised to match any further donations from both employees and attendees. They stated that local officials asked them not to cancel the event, because the area would benefit from the tourism dollars as it recovers from the storm. I expected a lower turnout due to the storm, and because Miami was ‘so far away.’ And while I saw fewer familiar faces from LA and California, the fact that this was the first MAX on the East Coast appears to have led to a surge of first-time attendees from that side of the country, with total attendance of over 10,000 people.

Opening Keynote

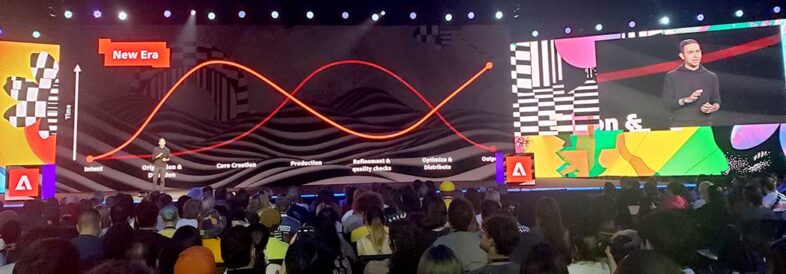

The conference kicked off with a Keynote on Monday morning, which was unsurprisingly focused on Generative AI. Adobe highlighted their new AI developments, and argued that their approach to AI data and training is more responsible than that of most of their competitors. Adobe only trains their AI models on content they have a license to use, instead of scraping the internet for publicly accessible data. And they tag content that has been modified by Generative AI tools in their applications, via their Content Authenticity Initiative, which they have been pushing since 2019. Adobe is promoting the idea that AI should be a useful tool for artists, not a replacement for artists, a message that resonates well with this audience of ‘creatives.’

One of the main purposes of the MAX conference is to serve as a forum for Adobe to share their newest innovations and the next generation of their Creative Cloud applications. This week saw the release of new versions of many applications, including Premiere Pro, Photoshop, and Illustrator. But because the Creative Cloud subscription model allows a constant trickle of features to be released in small updates throughout the year, rather than one major update to convince users to buy a new version, the focus wasn’t necessarily on features released this week. The keynote presentations for individual applications spent considerable time reviewing features that had already shipped in minor updates over the last few months, and showing functionality that hasn’t even reached the public betas yet, but that Adobe claims is coming soon. This was especially true for the video tools, since Firefly for Video (Adobe’s Gen-AI toolset for video) is just entering private beta and is not quite ready for the general public. But Adobe clearly wanted to show off the exciting improvements that are coming, primarily to Premiere Pro; After Effects wasn’t even mentioned.

Not all applications of AI technology are ‘generative,’ since AI can also be used to parse existing content in various ways without generating anything new. This could mean searching existing footage to automatically tag objects or actions, or isolating objects within a shot, like Premiere Pro’s upcoming Object Selection Tool, which was demonstrated during the keynote. But the generative AI tools are the flashier features to show off and talk about. Firefly’s upcoming still-to-video capability will combine a source image and a text prompt to ‘animate’ a still image into a video asset. Other tools will use Gen-AI to add objects to existing footage, or to remove objects, which requires generating replacement background.

Other highlighted features included new Photoshop tools for removing ‘distractions’ from shots, including wires, cables, and people. Illustrator is getting enhanced image trace, presumably informed by AI, and ‘objects on path,’ which looks more revolutionary than its name implies. Adobe Express can now import PSD files, as well as Illustrator and InDesign documents.

They introduced Project Concept, a new browser-based, multi-user shared canvas for ideating with AI prompts and assets from Firefly. And Project Neo is a new 3D modeling tool aimed at Illustrator users and vector artists, for creating simple 3D assets.

The best breakout session I attended, by far, was AJ Bleyer’s Shooting for Generative AI, where he demonstrated a bunch of practical ways that Photoshop’s existing Gen-AI tools can be used for cleanup, set extension, or full-on static object addition in locked-off video shots. He then recommended adding camera motion in post, to make these locked-off shots more dynamic and believable. His techniques were simple but creative, giving stunning results that feel very achievable. Eventually much of what he demonstrated will be included within Premiere, but what he showed can be used today. I am looking forward to experimenting with some of his ideas as soon as I get back home.

I had the opportunity to talk to Adobe representatives from the FrameIO and Premiere Pro marketing teams about the newest features they have been releasing. FrameIO has their big Version 4 update coming out of beta and rolling out to all users. It brings a new, faster UI with more options, customizable asset collections and metadata, and a new link to Lightroom, to further accelerate Camera2Cloud workflows. They also announced Camera2Cloud partnerships with Canon, Nikon, and Leica, which together cover most of the major camera manufacturers. Canon is the one I care about personally, as a Canon user, but initial support will be limited to the new C400 and C80 Cinema EOS cameras, with a focus on video instead of stills.

Premiere Pro has Generative Extend hitting public beta this week, which allows editors to extend the beginning or end of a clip with up to two seconds of artificially generated visual content, and up to 10 seconds of audio. This is a first step into generative AI video, as Firefly’s text-to-video and image-to-video tools are not publicly available yet. Premiere also has a new wide gamut color management system coming, but that is still in beta, and was not included in this week’s general release of version 25 of the application. But the new Spectrum UI and updated audio editing features are now all included in the main version of the software. I expect to see many more significant updates showing up in Premiere in the next few months.

Inspiration Keynote

Tuesday morning started out with the “Inspiration Keynote,” which opened with a live musical performance by Yoli Mayor, who is from here in Miami. This was followed by a presentation from Adobe’s Scott Belsky about how AI is moving from what he described as the initial, primitive ‘prompt era’ to the emerging, more sophisticated ‘controls era,’ where artists have more direct input and control over what the AI system generates.

He also announced Substance 3D Viewer, a new standalone app as well as an extension of Photoshop, offering another way to integrate 3D assets into 2D work. He described how Behance is evolving into a marketplace for artists and artistic work. Beyond hosting portfolios of digital art, it connects artists with projects and work, and even lets them sell their art and other digital assets directly on the site through Behance Pro, a paid subscription. He also highlighted an Adobe partnership with Creative Mornings, an organization that hosts free creativity events in over 200 cities. He talked about artistic attribution as another benefit of content credentials, and suggested that those credentials may become significant proof of human creation in an age of digital transformation via AI. Adobe’s Stacy Martinet then introduced three artists who each shared some of their own creative process and journey.

Devon Rodriguez is known for his videos about making portraits of people on the New York subway. He spent many years studying art before becoming an overnight sensation on TikTok, and is now the most ‘followed’ visual artist on the internet. His advice was to do what you love, but to make a deliberate effort to market your work.

Emonee LaRussa is a cinematographer and motion graphics artist who loves making music videos. She highlighted the value of believing in yourself, but also of having evidence to back up that belief. She talked about structured time management, taking breaks to maximize productivity, and setting aside dedicated time for learning and inspiration. She also promoted vision boards as a tool for effectively manifesting your dreams and ideas.

Jason Naylor is an artist from Salt Lake City who was raised Mormon, but doesn’t fit within that box. He is a painter, focused on large murals and other street art. He talked about the importance of message, and how the way art makes you feel is more important than how it ‘looks.’ Basically, things need to have a point, instead of just looking cool. That is probably what separates good artists from the rest, in a universal sense. The open heart is his icon, representing that there is always room for more love. And his advice was to be yourself rather than trying to fit in or be normal.

The Inspiration Keynote concluded with a presentation of Digital Edge Awards for innovative students, and Adobe MAX Creativity Awards for professional designers.

Creativity Park

I spent the afternoon in the Creativity Park, touring booths from Adobe and many of their partners, including AMD, Dell, HP, and many others. Logitech was showing off their new MX Creative Console, which is like a Loupedeck CT Lite at one third the price. It is squarely targeted at Adobe users, with dedicated plugins for many of the Creative Cloud applications, and I look forward to trying it out. SanDisk was showing off a new Creator line of products targeting the prosumer ‘streamer’ demographic, which was basically their existing products rebranded in a sky blue color. Gatorade had a booth where they were creating custom water bottles for attendees, based on Firefly Gen-AI designs.

Dell was showing a Snapdragon-based ARM laptop, a platform that is getting wider native support from Adobe apps. HP was showing a new Omen ‘gaming’ monitor that also includes their traditionally professional DreamColor functionality. The UHD-resolution OLED display supports HDR and high frame rates up to 240Hz. It also supports G-Sync and has a built-in KVM, so it looks to be a pretty well-rounded solution for the most discerning users. The positioning of that display as a gaming device is representative of the audience for the entire show. Branded as a conference for ‘creatives,’ MAX is not targeting stuffy, traditional corporate types so much as younger ‘new media’ influencers and YouTubers.

MAX Sneaks 2024

Tuesday afternoon was capped off with the Adobe Sneaks presentation, where various Adobe engineers show off bleeding-edge research projects that haven’t necessarily been developed into software applications yet. It serves to get users excited about what may be coming in the medium-term future, and the demonstrations vary greatly. This year there were nine, mostly focused on new implementations and controls for generative AI. These were my favorites:

Project Remix A Lot can convert a sketch to an editable AI vector, and it can ‘remix’ canvases into different shapes and aspect ratios, or mix old designs with new content.

Project Perfect Blend allows for automated compositing in Photoshop, to harmonize shots with different lighting. It adds shadows and reflections, and corrects temperature and exposure to match layers seamlessly.

Project Turntable allows users to create alternate perspectives of 2D vector art, allowing them to be rotated horizontally or vertically, with gen-AI filling in the newly revealed details.

MAX Bash

Sneaks was followed by MAX Bash, a big party and concert featuring performer T-Pain. This used to be a combined event, with food and music together at a large outdoor venue, but this year the food and celebration were outside the hall, while the main auditorium was converted from a vast sea of folding chairs for the presentations into a concert venue. That wrapped up the main events for MAX; Wednesday was more low-key, with various sessions to check out, and a last opportunity to visit the booths in the Creativity Park. I don’t know if they will be moving MAX back to LA next year, but they did announce that the next MAX event will be in Tokyo next February.