As the first product coming to market featuring NVidia’s new Ada Lovelace architecture, the GeForce 4090 graphics card has a host of new features to test out. With DLSS 3 for gaming, AV1 encoding for video editors and streamers, and ray tracing and AI rendering for 3D animators, there are new options available for a variety of potential users. While the GeForce line of video cards has historically been targeted towards computer gaming, NVidia knows that the cards are also valuable tools for content creators, and a number of new features are designed especially for those users.

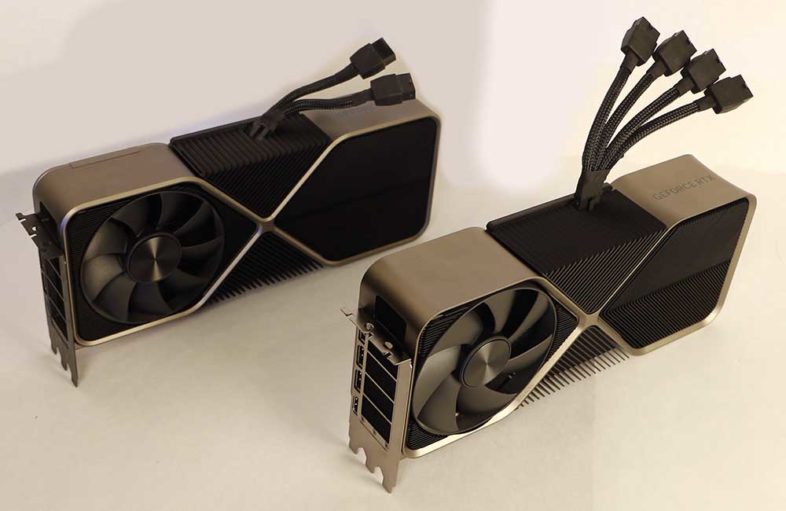

The New Graphics Card

Like its predecessor, the Ampere-based 3090, the new GeForce 4090 Founder’s Edition card is a behemoth, taking up three PCIe slots and exceeding the full-height standard, so you will need a large case. At first glance it looks like NVidia just dropped the new card into the previous generation’s shroud and cooling solution, but closer inspection shows that this is not true. While they continued to use the same overall design approach, they have adjusted and improved it in nearly every way.

Most significantly, the 4090 is 1/4″ shorter than the 3090, which is important because I have repeatedly found the 3090 to be slightly too long for a number of cases, to the point of scratching the edge of my card while fitting it into spots where it is too tight. The 4090 makes up for that by being 1/4″ thicker than the 3090. The size allows NVidia to use larger fans on the card (116mm instead of 110mm), which are now counter-rotating, with fewer blades and better fluid dynamic bearings, all to increase airflow and minimize fan noise. As far as I can tell, they have succeeded.

Power Requirements

You will also need a large power supply to support the card’s energy consumption, which peaks at 450 watts. NVidia recommends at least an 850W power supply, so my Fractal Design 860W unit just barely qualifies.

All the new cards use a new 12-pin PCIe 5.0 power connector, which is similar to the connector on the Ampere cards, but with slight differences, including additional signaling pins. While the previous card came with an adapter to direct the current from two 8-pin plugs into the card, the new cards include an adapter to harness the power from up to four 8-pin PCIe power plugs, although only three are absolutely required. The lower-tier GeForce 4080 cards will have lower power requirements, but utilize the same plug. Eventually this new plug should become a standard feature on new high-end power supplies, simplifying the process of powering the card.
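The power figures above can be checked with some quick arithmetic. The sketch below is only illustrative: the 150 W rating for a standard 8-pin PCIe plug is an assumption brought in from the PCIe specification, not a number from this review, and the function names are made up for the example.

```python
import math

# Figures quoted in the review.
CARD_PEAK_WATTS = 450
RECOMMENDED_PSU_WATTS = 850
REVIEWER_PSU_WATTS = 860

# Assumption (not from the review): a standard 8-pin PCIe plug is rated for 150 W.
EIGHT_PIN_WATTS = 150


def min_plugs_needed(card_watts: int, plug_watts: int = EIGHT_PIN_WATTS) -> int:
    """Smallest number of 8-pin plugs whose combined rating covers the card."""
    return math.ceil(card_watts / plug_watts)


def psu_headroom(psu_watts: int, card_watts: int = CARD_PEAK_WATTS) -> int:
    """Watts left for the CPU, drives, and fans while the GPU is at peak draw."""
    return psu_watts - card_watts


print("8-pin plugs required:", min_plugs_needed(CARD_PEAK_WATTS))
print("Adapter capacity with four plugs (W):", 4 * EIGHT_PIN_WATTS)
print("Headroom on the 860W supply (W):", psu_headroom(REVIEWER_PSU_WATTS))
```

Under that assumption, three plugs exactly cover the 450W peak, which would explain why three are the minimum and the fourth is optional.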

The one thing I don’t like about the new plug on the cards is that by having it sticking directly out of the top of the card, it further increases the minimum case size rather dramatically. I imagine they did this because coming out the end of the card would interfere with hard drive bays and cooling systems in many cases, and because the underlying printed circuit board doesn’t extend past the current connector location. This has the result of implicitly requiring a larger volume system chassis, which should increase air cooling efficiency. The card does fit fine in my case, because I was prepared for it, after the 3090 didn’t fit in my main system two years ago, but I don’t think the current power cable solution is very elegant or ideal.

The new cards all still use a 16-lane PCIe 4.0 interface, because they don’t yet saturate the available bandwidth of that connection enough to justify the expense of moving to the emerging PCIe 5.0 standard that is available on new motherboards. The only time I could imagine that being an issue is when the connection is shared between two GPUs in two 8-lane slots, but that multi-card approach to increasing performance is falling out of style in consumer systems. Part of the reason is the complexity of implementing SLI or Crossfire at the application level, but more significantly, individual GPU performance scales much higher than it used to. Similar to current CPU options, the high-end options now scale to much greater performance than most users will ever be able to fully utilize. This removes most of the need to harness the power of multiple separate chips or cards to increase performance, simplifying the end solution. To that end, there has been no mention of NVLink or similar technologies to harness the power of multiple 40-series GPUs. And the new GPU options scale up really high, with the new Ada Lovelace based chips inside these new cards being up to twice as powerful as the previous Ampere chips.
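To put the lane counts in perspective, here is a rough sketch of the theoretical one-way bandwidth for each configuration. The transfer rates (16 GT/s per lane for PCIe 4.0, 32 GT/s for PCIe 5.0) and the 128b/130b encoding come from the PCIe specifications rather than from this review, and real-world throughput will be lower.

```python
# Assumptions from the PCIe specifications (not from the review):
# per-lane transfer rate in GT/s, and 128b/130b line encoding.
GT_PER_S = {"4.0": 16, "5.0": 32}
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(generation: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s for a PCIe link."""
    gigabits = GT_PER_S[generation] * ENCODING_EFFICIENCY * lanes
    return gigabits / 8  # 8 bits per byte


for gen, lanes in [("4.0", 16), ("4.0", 8), ("5.0", 16), ("5.0", 8)]:
    print(f"PCIe {gen} x{lanes}: {pcie_bandwidth_gb_s(gen, lanes):.1f} GB/s")
```

Sharing the link across two 8-lane slots halves the bandwidth each GPU sees, while an 8-lane PCIe 5.0 link would match today's full 16-lane PCIe 4.0 slot.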

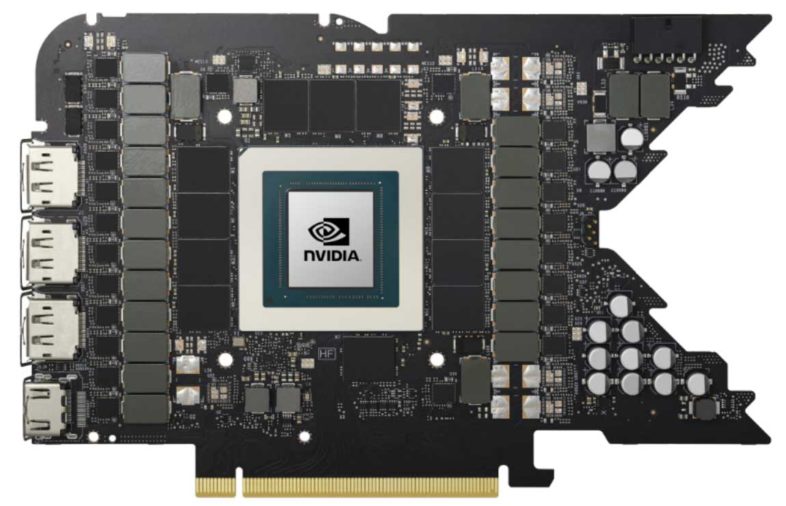

The Ada Lovelace Chip

The GeForce 4090, as the current top product in the new lineup, has 16384 CUDA cores, built on a 4nm process and running at 2.5GHz, which greatly exceeds the previous generation GeForce 3090’s count of 10496 cores maxing out at 1.7GHz. It has over 3x as many transistors, at over 76 billion, and 12x the L2 cache of the previous version, with 72MB on the 4090. The memory configuration is similar to the 3090, with 24GB running at 1TB/s, but it now uses lower power memory chips. The other big change since the last generation is the 8th generation NvEnc hardware video encoder, which now supports AV1 encoding acceleration. And now, with dual encoders operating in parallel, content up to 8Kp60 can be encoded in real time for high resolution streaming. More details on the AV1 encoding support are below, in the video editing section.
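As a back-of-the-envelope comparison, the core counts and clock speeds above can be turned into a theoretical throughput figure. This sketch assumes the usual convention of two FP32 operations per CUDA core per clock (one fused multiply-add), which is not stated in this review, and it ignores memory, cache, and architectural differences entirely.

```python
def fp32_tflops(cuda_cores: int, clock_ghz: float, ops_per_core_per_clock: int = 2) -> float:
    """Theoretical FP32 throughput in TFLOPS.

    ops_per_core_per_clock=2 is an assumption (one fused multiply-add per clock).
    """
    return cuda_cores * clock_ghz * ops_per_core_per_clock / 1000


# Core counts and clocks as quoted in the review.
rtx_4090 = fp32_tflops(16384, 2.5)
rtx_3090 = fp32_tflops(10496, 1.7)

print(f"4090: {rtx_4090:.1f} TFLOPS")
print(f"3090: {rtx_3090:.1f} TFLOPS")
print(f"Ratio: {rtx_4090 / rtx_3090:.2f}x")

# The 12x L2 cache claim implies the previous card's cache size.
print(f"Implied 3090 L2 cache: {72 / 12:.0f}MB")
```

The ratio comes out a little above 2x, roughly in line with the doubling in render performance reported later in this review.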

New Software and Tools

Many of the newest functions available with Ada hardware are unlocked through the new software developments NVidia has been making. The biggest one, and the most relevant to gamers, is DLSS 3.0, which stands for Deep Learning Super Sampling.

While DLSS 2 utilized AI Super Resolution to decrease the number of pixels that needed to be rendered in 3D, by intelligently up-scaling the lower resolution result, DLSS 3 takes this a step further, using AI-based Optical Multi-Frame Generation to generate entirely new frames that are displayed between the existing rendered ones. This process is hardware accelerated by Ada’s 4th generation Tensor Cores and a dedicated Optical Flow Accelerator. DLSS 3 uses these AI-generated interpolated frames to double the frame rate, even for CPU-bound games like MS Flight Sim. With both optimizations enabled, 7 of every 8 pixels onscreen are generated by the AI engine, not the 3D rendering engine. This does lead me to wonder: does it scale the frame or double the frame rate first? I am going to guess the frame rate, so there is less total data to sort through for the interpolation process, but I don’t actually know.
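The "7 of every 8 pixels" figure can be reproduced with simple fractions. This sketch assumes Super Resolution upscales by 2x along each axis (for example 1080p to 4K), which the review does not state, and that one generated frame is inserted for every rendered frame; the function name is only for illustration.

```python
from fractions import Fraction


def ai_generated_fraction(upscale_per_axis: int, generated_per_rendered_frame: int) -> Fraction:
    """Share of displayed pixels that come from the AI rather than the 3D renderer."""
    # Super Resolution: only 1 / (scale^2) of each rendered frame's pixels are rendered.
    rendered_pixels = Fraction(1, upscale_per_axis ** 2)
    # Frame Generation: only 1 of every (1 + N) displayed frames is rendered at all.
    rendered_frames = Fraction(1, 1 + generated_per_rendered_frame)
    return 1 - rendered_pixels * rendered_frames


print(ai_generated_fraction(2, 1))  # both optimizations enabled
print(ai_generated_fraction(2, 0))  # Super Resolution only
```

Because the two factors are simply multiplied, the ratio is the same whichever step runs first, so this arithmetic does not settle the ordering question.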

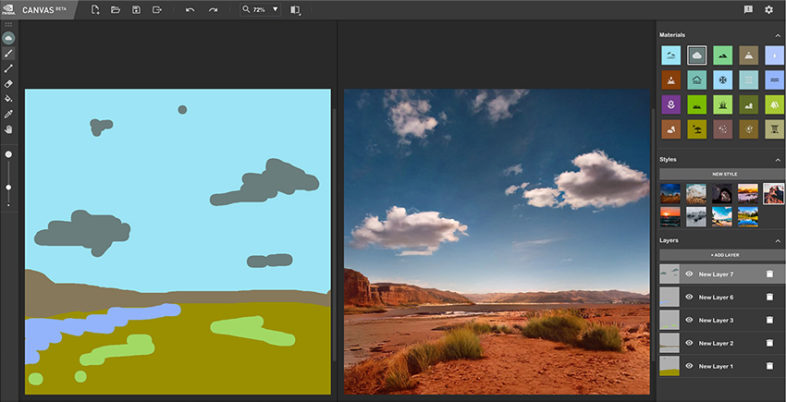

NVidia’s other software advances are applicable to more than just increasing computer game frame rates. NVidia Canvas is a new locally executed version of their previously cloud-hosted GauGAN application. There is now a wider variety of controls and features, and everything is processed locally on your own GPU. It is available for free to any RTX user.

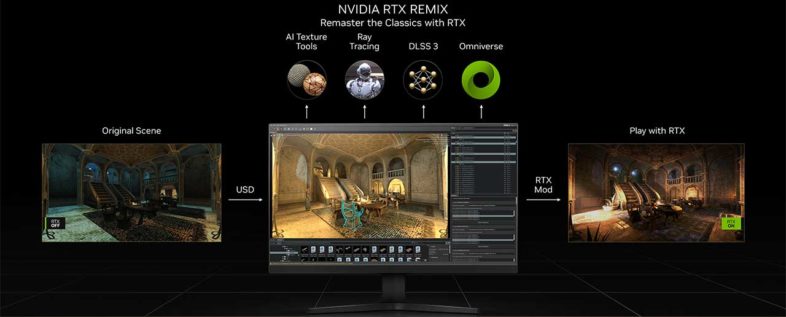

NVidia Remix is their toolset for the game modding community. It uses some very innovative approaches and technology to add ray tracing support to older titles, along with other hardware-level approaches to capture and export geometry and other 3D data to Omniverse’s USD format, regardless of its source type. While I don’t do this type of work, I do play older games, so I am looking forward to seeing whether anyone brings these new technologies to bear on the titles I play.

In addition, NVidia Broadcast was introduced two years ago to utilize AI and hardware acceleration to clean up and modify the audio and video streams of online streamers, and even teleconference participants, in real time. It utilizes GPU hardware to do background noise removal and processing, visual background replacement, and motion tracking on computer microphone and webcam data streams. Once again, via some creative virtual drivers, they are able to do it in a way that is automatically compatible with nearly every webcam application.

Real World Performance

So that is a lot of detail about this new card and the chip inside of it, but that still leaves the question: how fast is this card? For gaming, it offers more than twice the frame rates in applications that support DLSS 3, like the upcoming release of Microsoft Flight Simulator. I was able to play smoothly at full resolution on my 8K monitor with the graphics settings at maximum, and was getting over 100fps in 4K with AI frame generation enabled. The previous flagship 3090 allowed 8K at low quality settings, and about 50fps at 4K with maximum graphics settings, so this is a huge improvement.

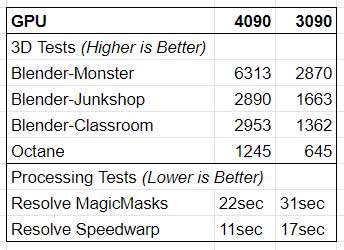

For content creation, the biggest improvements will be felt by users working in true 3D. With full render enabled for the viewport, the Blender UI feels dramatically different on the 4090 than it does with the 3090’s pixelated free-view.

Both the Blender and Octane render benchmarks report double the render performance with the 4090 compared to the previous 3090. That is a massive increase in performance for users of 3D applications that can fully utilize the CUDA and OptiX acceleration.

For video editors, the results are a little less clear-cut. DaVinci Resolve has a lot of newer AI-powered features, which run on the GPU, so many of these functions are about 30% faster with the newer hardware. This could be significant for users who frequently use tools like cut detection, auto framing, magic mask, or AI speed processing. This performance increase is in addition to the new AV1 encoding acceleration in NvEnc, which will significantly speed up exports to that format. The improvements in Premiere Pro are much more subtle, where AV1 acceleration can be harnessed via the upcoming Voukoder encoding plugin. But most Adobe users won’t see huge performance or functionality improvements with these new cards without further software updates, specifically updates that allow native import and export of AV1 files.

AV1

AV1 is a relatively new codec, which is intended to improve upon, and in many cases replace, HEVC. It offers higher quality at lower bitrates, which is important to those of us with limited internet bandwidth, and has no licensing fees, which should accelerate support and adoption. The only real downside to AV1 is the encode and decode complexity, which is where hardware acceleration comes into play. The 30 series Ampere cards introduced support for accelerated AV1 decoding, which allows people to play back AV1 files from YouTube or Netflix smoothly, but this card is the first with the 8th generation NvEnc engine, which now supports hardware accelerated encoding of AV1 files. This can be useful for streaming applications like OBS and Discord, for renders and exports from applications like Resolve and Premiere, and even for remote desktop tools like Parsec, which run on NvEnc. I for one am looking forward to the improved performance and possibilities that AV1 has to offer as it gets integrated into more products and tools.

At higher bitrates, AV1 is not significantly better than HEVC, but at lower bitrates, it makes a huge difference. This review was my first hands-on experimentation with the codec, and I found that a 5Mb/s data rate was sufficient to capture UHDp60 content, while 2Mb/s was usually enough for the 24fps UHD content I was rendering. I would usually recommend twice those data rates for HEVC encoding, so that is a significant reduction in bandwidth requirements. The future is here.
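To see what those data rates mean in practice, the sketch below converts them into file size per hour of footage. It assumes the figures above are megabits per second and takes the "twice the data rate" rule of thumb for HEVC at face value; the helper name is purely illustrative.

```python
def gigabytes_per_hour(megabits_per_second: float) -> float:
    """File size in GB for one hour of video at a constant bitrate."""
    seconds_per_hour = 3600
    megabytes = megabits_per_second * seconds_per_hour / 8  # 8 bits per byte
    return megabytes / 1000


# AV1 data rates from the review, in Mb/s (assumed to mean megabits per second).
av1_rates = {"UHD 60fps": 5, "UHD 24fps": 2}

for label, av1_mbps in av1_rates.items():
    hevc_mbps = av1_mbps * 2  # the reviewer's rule of thumb for HEVC
    print(
        f"{label}: AV1 {gigabytes_per_hour(av1_mbps):.2f} GB/hour, "
        f"HEVC {gigabytes_per_hour(hevc_mbps):.2f} GB/hour"
    )
```

Halving the bitrate halves both the storage per hour and the upload bandwidth needed for a live stream, which is where the savings matter most for users on limited connections.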

If you are doing true 3D animation and rendering work in an application that supports ray tracing or AI denoising, the additional processing power in this new chip will probably change your life. For most other people, especially video editors, it is probably overkill. A previous generation card will do most of what you need, at what is hopefully now a lower price. But if you need AV1 encoding or a few of the other new features, it will probably be worth it to spring for a newest-generation card, just maybe not the top one in the lineup. But for those who want the absolute fastest GPU available in the world, there is no doubt: this is it. There are no downsides. It is only a matter of whether you can justify the price, and whether you have a system that it will fit into. But it is screaming fast, and full of new features.