Adobe MAX was held online this year, like most other annual conferences in 2020. It was also free to attend, a major departure from previous years, but Adobe tried to preserve as much of the experience as possible in the new online format. The schedule was similar to past years. It kicked off with a big keynote, hosted by Conan O'Brien, presenting what Adobe's product teams have been developing over the past year. This was followed by less technical, more creative segments profiling various artists and their experiences and inspirations. These were grouped by field, from photography to graphic design and video production, and played as a continuous stream after the keynote. As a video engineer who doesn't really follow art, I had hardly heard of any of these artists. I realize they are famous, just outside of my world. But I made sure to tune in for Jason Levine's video-focused hour, featuring four video-focused artists. At the more technically focused events I normally attend, these profiles would include details about how new technological developments are helping the artists realize their visions better than ever before, but not at Adobe MAX. I saw a session with Kelli Anderson, who has taken folding paper to a whole new level in some very impressive and functional ways, and another with director Taika Waititi sharing his irreverent take on art and the creative process. You never know what to expect from those segments, but they do help you look at things from new perspectives, which I suppose is their primary intent.

There was also the traditional MAX Sneaks presentation, where various Adobe developers show off functionality they have been working on that has not yet made its way into shipping products. This year we saw music-based video retiming with "On the Beat," shared interactive AR experiences with "AR Together," brush-based font creation with "Typographic Brushes," and physics-based intersection prevention for 3D objects with "Physics Whiz," to name a few. None of the demos got me as excited as earlier ones, such as the sneak that showed off the functionality that eventually became the new Roto Brush 2 AI object selector in After Effects (AE).

Along with the event come updates to nearly every Creative Cloud program. Premiere Pro has been getting many significant updates throughout the year, including the new Productions project organization framework and HDR support, so the only new thing we are seeing at MAX is a set of captioning tools that will eventually be driven by an AI-based speech-to-text engine. After Effects is getting Roto Brush 2 for AI-enhanced rotoscoping, which is a significant improvement over previous methods. It is also getting a host of enhancements that make it easier to composite in 3D space. Character Animator is getting AI-enhanced lip-sync and facial animation driven by audio, as well as improvements to timeline management and limb IK (inverse kinematics). Photoshop probably has the most new features released at this event, with a number of AI-powered improvements, including sky replacement and neural filters, for serious AI enhancement of photographs. These photo-modifying tools are counterbalanced by Adobe's Content Authenticity Initiative, which tracks how photos have been edited.

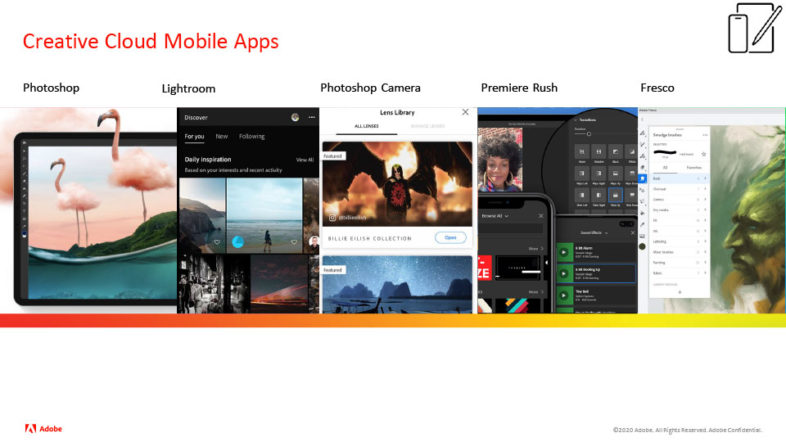

For workflows on the move, Illustrator is coming to iPad, applications can be livestreamed from iPad, and Fresco is coming to iPhone. The iPhone release surprised me, because without a stylus, that is a small screen for doing precision work with a fingertip. Adobe also released support for Fresco on more PC devices, including my ZBook X2, back in August. That gives me everything iPad users can do in Creative Cloud, and much more, on my X2. But if you are an iPad user, Adobe is constantly adding more functionality to your device.

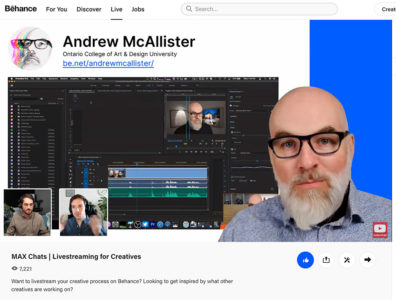

Another feature that Adobe had previously announced, and is still weaving through its product line, is in-application livestreaming. This was introduced a while back through the iPad apps, and Adobe's timing couldn't have been better. With the pandemic and lockdowns, many artists were looking for new ways to express themselves and connect with others, and lots of people had more time on their hands than usual. So there is now a community of artists on Behance.net who livestream their artistic work, primarily in Photoshop, Illustrator, and Fresco.

When this livestreaming initiative was first announced last year, I didn't realize it was targeted at those applications. I was intrigued by the possibilities, as well as by the potential copyright and NDA implications of using it for video workflows. So I attended a session dedicated to in-application livestreaming during MAX and learned a bit more about it. Currently, livestreaming is only integrated into the iPad apps, but users can stream the desktop apps to Behance through the usual tools, like OBS. Desktop streaming is not allowed by default. You have to request access through Behance. When I tried to sign up to stream some Premiere Pro workflow ideas I have, it literally asked me whether I was going to stream Photoshop or Fresco, so they are clearly targeting those apps. But Adobe has developed a really cool integration with the document history metadata in those apps, called Tool Timeline. It tracks exactly which tools and settings streamers are using as they work, and viewers can navigate the recordings through that log. There is also a plug-in for Photoshop on the desktop that lets those settings and functions be tracked the same way the integrated streaming does in the iPad version.

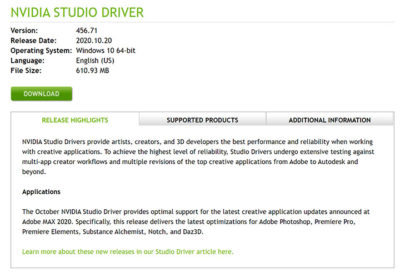

It would be interesting to see whether they expand that to other apps in the future. It would also be interesting to see GeForce Experience, which can already stream to services like Twitch and YouTube, add support for Behance in a "GeForce Creators Experience" version of the software. This would allow users to stream their entire workflow. The primary strength of the Creative Cloud apps is how seamlessly they all work together, so streaming an individual application could never communicate an entire media workflow. That is how it is done today with OBS. But a Creators variant of GeForce Experience wouldn't constantly try to get me to install Game Ready drivers instead of NVIDIA's Studio drivers, and it would let me know when a new Studio driver was released, as version 456.71 was this week to coincide with MAX.

Also coinciding with MAX is HP's announcement of new displays in its top-end Z-series lineup. There are eight different displays, ranging from 24 to 27 inches, that will ship early next year. They all have much thinner cabinets and are made of recycled materials. HP also claims to be able to limit blue light output to protect your vision, without affecting perceived colors, via its Eye-Ease feature. This doesn't seem like it should even be possible, because blue is an important part of the light spectrum, but concern about blue light from electronic devices has really taken off in the last few years. Four of the displays offer USB-C connections and are unique in having a built-in network interface card (NIC). This gives laptop users a single connection for a high-resolution display, USB peripherals, power charging, and now hard-wired LAN support. The NIC also allows various statistics about the displays themselves to be tracked, even when they are not connected to a host system, which is useful for larger enterprises.

Two of HP's new displays will be in the premium DreamColor line, with true 10-bit color panels and now high dynamic range (HDR). HDR has both video and VESA standards. From a video perspective, computer displays run HDR10 instead of HLG, but that doesn't necessarily mean they use the expected Rec.2020 color space.

VESA DisplayHDR has various brightness tiers, and these displays will be DisplayHDR 600 certified, a mid-level tier that requires 600 nits of peak brightness. But they are listed as supporting the sRGB, DCI-P3, and Rec.709 color spaces, so I am not clear how that would work for people working with HDR video in the Rec.2020 or Rec.2100 color space. Either way, I am looking forward to trying them out when the time comes. They should pair well with my ZBook X2, delivering 100 W of power to it, connecting it to my LAN, and giving me 10-bit HDR color at UHD resolution to match its integrated DreamColor display.

But MAX isn't just about seeing which new software and hardware updates are available. There are also breakout sessions on application design and usage, creativity, and a variety of other topics. Some of these were interactive livestreams from teams of people who work with the software, while others were pre-recorded tutorials on how to use various features. Previously these were all taught live, in front of an audience of attendees. It would have been possible to recreate that real-time experience online. But like many other activities making the jump online, many of these MAX sessions were pre-recorded videos. In those cases, the creator is available to chat with viewers during the scheduled playback. Still, I feel something is missing from the live interaction that used to take place at the in-person conference, where we all sat in the room watching the presenter manipulate the software in front of us. The program is never going to crash in a pre-recorded demonstration, and render and processing time can be cut out for efficiency. But that makes it a less authentic representation of actually using the software. Livestreaming carries a greater risk of things going wrong, but that risk is what strengthens the viewer's connection to what, or who, they are watching. Adobe seems to recognize the value of that real-time connection as a way to connect artists, since it is the basis of their application livestreaming tools, but that principle hasn't been applied to these breakout sessions. On the other hand, I had offered to present an advanced Premiere Pro workflow course at the conference. I would have been much more comfortable doing that as a pre-recorded segment, since I have never led one of these sessions before. So I understand the temptation to do things this way, as well as the benefits. But I still see something being lost when sessions are pre-recorded, as I did with GTC and other events.

Pre-recorded or not, there are all sorts of things to be learned from the sessions at MAX. Most of the video-related sessions are fairly introductory, presumably by design given MAX's target audience. Besides watching most of the mainstream creativity sessions, I focused on learning more about After Effects, because there is always more to learn about AE, and Adobe keeps adding to it. I use AE frequently, but usually in the same way I have for years, and given the tasks I work on, I rarely dabble in the new features. But the 3D Camera Tracker, Content-Aware Fill, and the new Roto Brush 2 are all things I could use more instruction on.

Adobe put on an impressive event, considering it was the first time they have attempted to hold MAX online. But I for one am a bit burned out on online conferences, and I am looking forward to doing these things in person again, or at least in real time, instead of watching them like a TV channel. Still, I am eager to try the software they showed off, and I have a few new ideas I want to experiment with after listening to the various creative speakers.