Graphics Performance Versus Portability

As a laptop user and a fan of graphics performance, I have always had to weigh performance against portability when selecting a system. My choices usually bounce back and forth, as neither option is totally satisfactory: systems are always too heavy or not powerful enough. My first laptop when I graduated high school was the 16″ Sony Vaio GRX570, with the largest screen available at the time, running 1600×1200 pixels. After four years carrying that around, I was eager to move to the Dell XPS M1210, the smallest laptop with a discrete GPU. That was followed by a Quadro-based Dell Precision M4400 on the larger side, before bouncing to the lightweight carbon fiber 13″ Sony Vaio Z1 in 2010, which my wife still uses. This was followed by my current Aorus X3 Plus, which has both power (GF870M) and a small form factor (13″), but at the expense of everything else.

Sony Vaio Z Series

The Vaio Z1 was the source of my first look into external graphics solutions. It was one of the first hybrid graphics systems, allowing users to switch between different GPUs. Its GeForce 330M was powerful enough to run Adobe’s Mercury CUDA Playback engine in CS5, but was at the limit of its performance. It didn’t support my 30″ display, and while the SSD storage solution had the throughput for 2K DPX playback, the GPU processing couldn’t keep up. Other users were upgrading the GPU with an ExpressCard-based ViDock external PCIe enclosure, but a single lane of PCIe 1.0 bandwidth (2Gb/s) wasn’t enough to make it worth the effort for video editing. (3D gaming requires less source bandwidth than video processing.) Sony’s follow-on Z2 model offered the first commercial eGPU, connected via Light Peak, the forerunner to Thunderbolt. It allowed the ultra-light Z series laptop to utilize an AMD Radeon 6650M GPU and Blu-ray drive in the proprietary Media Dock, presumably over a PCIe x4 1.0 (8Gb/s) connection.

Thunderbolt 3

Alienware also has a proprietary eGPU solution for their laptops, but Thunderbolt is really what makes eGPUs a marketable possibility, giving direct access to the PCIe bus at x4 speeds over a standardized connection. The first generation offered a dedicated 10Gb connection, while Thunderbolt 2 increased that to a 20Gb shared connection. The biggest things holding back eGPUs at that point were the lack of PC adoption of the “Apple” technology licensed from Intel, and OSX limitations on eGPUs. Thunderbolt 3 changed all of that, increasing the total connection bandwidth to 40Gb, the same as first generation PCIe x16 cards. And far more systems support Thunderbolt 3 than the previous iterations. Integrated OS support for GPU switching in Windows 10 and OSX (built on laptop GPU power saving technology) further paves the path to eGPU adoption.
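To put the bandwidth figures quoted so far side by side, here is a minimal Python sketch of the arithmetic. It relies on the published PCIe 1.0 signaling rate (2.5 GT/s per lane) and its 8b/10b encoding; the function name and labels are just for illustration. Note that the 2Gb/s and 8Gb/s figures above are usable rates, while the 40Gb comparison to a first generation x16 slot matches the raw signaling rate.

```python
# Rough link-bandwidth arithmetic for the connections discussed above.
# PCIe 1.0 signals at 2.5 GT/s per lane and uses 8b/10b encoding,
# so only 8 of every 10 bits on the wire carry data.

PCIE1_RAW_GBPS_PER_LANE = 2.5
ENCODING_EFFICIENCY = 8 / 10


def pcie1_bandwidth_gbps(lanes):
    """Return (raw, usable) bandwidth in Gb/s for a PCIe 1.0 link."""
    raw = PCIE1_RAW_GBPS_PER_LANE * lanes
    return raw, raw * ENCODING_EFFICIENCY


# Labels are illustrative; the x4 width of the Media Dock is the article's presumption.
links = [
    ("ExpressCard ViDock (x1)", 1),
    ("Sony Media Dock (x4, presumed)", 4),
    ("Desktop slot (x16)", 16),
]

for label, lanes in links:
    raw, usable = pcie1_bandwidth_gbps(lanes)
    print(f"{label}: {raw:.1f} Gb/s raw, {usable:.1f} Gb/s usable")
```

Real-world eGPU throughput will be lower than any of these numbers, since protocol overhead and any other traffic sharing the cable are ignored here.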

Why eGPUs Now?

Even with all of this in my favor, I didn’t take the step into eGPU solutions until this month. I bought my personal system in 2014, just before Thunderbolt 3 hit the mainstream, and the last two systems I reviewed had Thunderbolt 3 but didn’t need eGPUs, with their mobile Quadro P4000 and P5000 internal GPUs. So I hadn’t had the opportunity to give it a go until I received an HP Zbook Studio x360 to review. Now, its integrated Quadro P1000 is nothing to scoff at, but there was significantly more room for performance gains from an external GPU.

Sonnet Breakaway Box

I have had the opportunity to review the 550W version of Sonnet’s Breakaway Box PCIe enclosure over the last few weeks, allowing me to test a number of different cards in it, including four different GPUs, as well as my Red-Rocket-X and 10GbE cards. Sonnet has three different eGPU enclosure options, depending on the power requirements of your GPU. They sent me the mid-level 550 model, which should support every card on the market besides AMD’s power-guzzling Vega64-based GPUs.

The base 350 model should support GF1080 or 2080 cards, but not overclocked, Titanium, or Titan versions. The 550 model includes two PCIe power cables that can be used in 6 or 8 pin connectors, which should cover any existing GPU on the market, and I have cards requiring nearly every possible combo (6pin, 8pin, both, and dual 8pin). Sonnet has a very thorough compatibility list available online for more specific details.

Installation

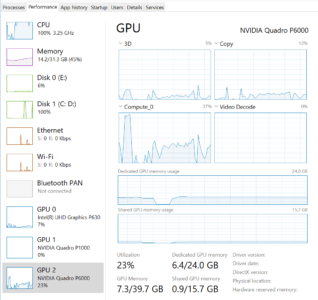

I installed my Quadro P6000 into the enclosure, because it used the same drivers as my internal Quadro P1000 GPU, and would give me the most significant performance boost. I plugged the Thunderbolt connector into the laptop while it was booted. It immediately recognized the device, but only saw it as a “Microsoft Basic Display Adapter” until I re-installed my existing 411.63 Quadro drivers and rebooted. After that, it worked great. I was able to run my benchmarks and renders without issue, and see which GPU was carrying the processing load just by looking in the task manager performance tab. (Screen shot)

Once I had finished my initial tests, I safely removed the hardware in the OS and disconnected the enclosure. I swapped the installed card with my Quadro P4000, and plugged it back into the system without rebooting. The system immediately detected it, and after a few seconds the new P4000 was recognized and accelerating my next set of renders. When I attempted the same procedure with my GeForce 2080TI, it did make me install the GeForce driver (416.16) and reboot before it would function at full capacity. (Subsequent transitions between NVidia cards were seamless.)

The next step was to try an AMD GPU, since I have a new RadeonPro WX8200 to test, which is a Pro version of the Vega 56 architecture. I was a bit more apprehensive about this configuration due to the integrated NVidia card, having experienced those drivers not co-existing well in the distant past. But I figured: “what’s the worst that could happen?” Initially plugging it in gave me the same Microsoft Basic Display Adapter device until I installed the RadeonPro drivers. Installing those drivers caused the system to crash and refuse to boot. Startup repair, system restore, and OS revert all failed to run, let alone fix the issue. I was about to wipe the entire OS and let it reinstall from the recovery partition when I came across one more idea online. I was able to get to a command line in the pre-boot environment and run a DISM (Deployment Image Servicing and Management) command to see which drivers were installed. (“Dism /image:D:\ /Get-Drivers | more”) This allowed me to see that the last three drivers, oem172.inf through oem174.inf, were the only AMD-related ones on the system. I was able to remove them via the same tool (“DISM /Image:D:\ /Remove-Driver /Driver:oem172.inf”) and when I restarted, the system booted up just fine. I pulled the card from the eGPU box, wiped all the AMD files from the system, and vowed never to do something like that again. Lesson of the day: don’t mix AMD and NVidia cards and drivers. To AMD’s credit, the WX8200 does not officially support eGPU installations, but still, extraneous drivers shouldn’t cause that much of a problem.
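For anyone facing the same recovery, the step of picking the offending packages out of a long driver listing can be sketched in Python. This is only an illustration under stated assumptions: the sample text imitates the general “Field : value” layout of DISM’s driver listing, the file names and provider strings in it are made up, and the helper names are hypothetical. The script only builds the removal command strings using the two DISM options quoted above; it does not run anything.

```python
# Sketch: find driver packages from one vendor in saved `Dism /Get-Drivers`
# output and build the matching removal commands. Nothing is executed here.

# Illustrative sample only: the field layout is assumed, and the file names
# and provider strings are invented for this example.
SAMPLE_OUTPUT = """\
Published Name : oem171.inf
Original File Name : example_nvidia.inf
Class Name : Display
Provider Name : NVIDIA

Published Name : oem172.inf
Original File Name : example_amd_display.inf
Class Name : Display
Provider Name : Advanced Micro Devices, Inc.

Published Name : oem173.inf
Original File Name : example_amd_audio.inf
Class Name : MEDIA
Provider Name : Advanced Micro Devices, Inc.
"""


def parse_drivers(text):
    """Split the listing into one dict per driver package."""
    drivers, current = [], {}
    for line in text.splitlines():
        if " : " not in line:
            continue  # skip blank lines and banner text
        key, value = (part.strip() for part in line.split(" : ", 1))
        if key == "Published Name" and current:
            drivers.append(current)  # a new package starts here
            current = {}
        current[key] = value
    if current:
        drivers.append(current)
    return drivers


def removal_commands(drivers, provider_keyword, image="D:\\"):
    """Build one /Remove-Driver command per package from the given provider."""
    return [
        f"DISM /Image:{image} /Remove-Driver /Driver:{d['Published Name']}"
        for d in drivers
        if provider_keyword.lower() in d.get("Provider Name", "").lower()
    ]


for command in removal_commands(parse_drivers(SAMPLE_OUTPUT), "Advanced Micro Devices"):
    print(command)
```

Printing the commands rather than executing them keeps a human in the loop, which seems wise when the target is an offline Windows image that already refuses to boot.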

Performance Results

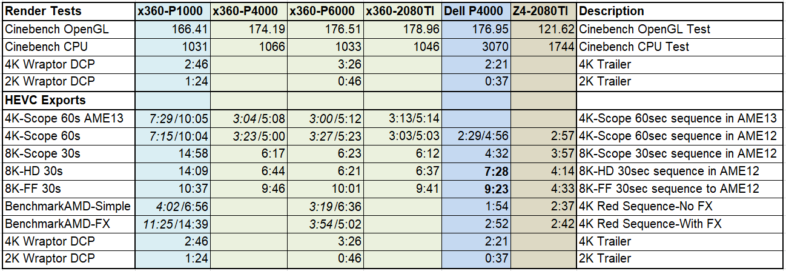

I tested Adobe Media Encoder export times with a variety of different sources and settings. Certain tests were not dramatically accelerated by the eGPU, while other renders definitely were. The main place where we see differences between the integrated P1000 and a more powerful external GPU is when effects are applied to high res footage. That is when the GPU is really put to work, so those are the tests that improve with more GPU power. I had a 1 minute sequence of Red clips with lots of effects (Lumetri, selective blur and mosaic, all GPU FX) that took 14 minutes to render internally, but finished in under 4 minutes with the eGPU attached. Exporting the same sequence with the effects disabled took 4 minutes internally and 3 minutes with the eGPU. So the effects cost 10 minutes of render time internally, but under 1 minute of render time (35 seconds to be precise) when a powerful GPU is attached. So if you are trying to do basic cuts-only editorial, an eGPU may not improve your performance much, but if you are doing VFX or color work, it can make a noticeable difference. I have a much more exhaustive spreadsheet of GPU benchmarking tests available here.
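The comparison above boils down to a few subtractions and one ratio, sketched here in Python. The times come from the rounded figures in the paragraph; the eGPU export with effects is assumed to be exactly 3:35 (the 3 minute baseline plus the 35 second effects overhead), so treat the results as approximate.

```python
# Render times in seconds, from the rounded figures quoted above.
# Assumption: the eGPU export with effects took exactly 3:35
# (3:00 without effects plus the stated 35 s of effects overhead).
internal = {"with_fx": 14 * 60, "without_fx": 4 * 60}
egpu = {"with_fx": 3 * 60 + 35, "without_fx": 3 * 60}


def fx_overhead(times):
    """Extra render time attributable to the GPU effects, in seconds."""
    return times["with_fx"] - times["without_fx"]


overall_speedup = internal["with_fx"] / egpu["with_fx"]
cuts_only_speedup = internal["without_fx"] / egpu["without_fx"]

print(f"Effects overhead, internal GPU: {fx_overhead(internal)} s")
print(f"Effects overhead, eGPU: {fx_overhead(egpu)} s")
print(f"Speedup with effects: {overall_speedup:.1f}x")
print(f"Speedup without effects: {cuts_only_speedup:.1f}x")
```

Under those assumptions the export with effects comes out close to four times faster, while the cuts-only export improves by only about a third.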

VR Headset Support

The external cards of course do increase performance in a measurable way, especially since I am using such powerful cards. But it is not just a matter of increasing render speeds; it is also about enabling functionality that was previously unavailable on the system. I connected my Lenovo Explorer WMR headset to the RTX2080TI in the Breakaway Box and gave it a shot. I was able to edit 360 video in VR in Premiere Pro, which is not supported on the included Quadro P1000 card. I did experience some interesting ghosting on occasion, where if I didn’t move my head everything looked perfect, but movement caused a double image, as if one eye was a frame behind the other. But the double image was appearing in each eye, as if there was an excessive motion blur applied to the rendered frames. I thought it might be a delay based on extra latency in the Thunderbolt bus, but other times the picture looked crisp regardless of how quickly I moved my head. So it can work great, but there may need to be a few adjustments made to smooth things out. Lots of other users online report it working just fine, so there is probably a solution available out there for that issue.

Full Resolution 8K Tests

I was able to connect my Dell 8K display to the card as well, and while the x360 happens to support that display already (DP1.3 over Thunderbolt), most notebooks do not. (And it increased the refresh rate from 30Hz to the full 60Hz.) I was able to watch HEVC videos smoothly at 8K in Windows, and was able to play 8K DNxHR files back in Premiere at full res, as long as there were no edits or effects. Just playing back footage at full 8K taxed the 2080TI at 80% compute utilization. But this is 8K we are talking about, playing back on a laptop, at full resolution. 4K anamorphic and 6K Venice X-OCN footage played back smoothly at half res in Premiere, and 8K Red footage played back at quarter res. This is not the optimal solution for editing 8K footage, but it should have no problem doing serious work at UHD and 4K. And if you want to use your Dell UP3218K display with a laptop, I highly recommend an eGPU to drive it smoothly.
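A back-of-the-envelope calculation suggests why a single DP1.3 connection tops out at 30Hz on this display. The Python sketch below is a rough estimate under stated assumptions, not a measurement: it assumes uncompressed 24-bit color, counts only the visible pixels (blanking intervals are ignored, so real requirements are somewhat higher), and uses the published DisplayPort 1.3 rate of four lanes at 8.1 Gb/s with 8b/10b encoding. The function names are just for illustration.

```python
import math

# DisplayPort 1.3 (HBR3): 4 lanes x 8.1 Gb/s, 8b/10b encoded -> about 25.92 Gb/s usable.
DP13_USABLE_GBPS = 4 * 8.1 * 8 / 10


def video_gbps(width, height, hz, bits_per_pixel=24):
    """Uncompressed video data rate in Gb/s, ignoring blanking intervals."""
    return width * height * hz * bits_per_pixel / 1e9


def links_needed(gbps, link_gbps=DP13_USABLE_GBPS):
    """Number of DisplayPort links required to carry the given data rate."""
    return math.ceil(gbps / link_gbps)


for hz in (30, 60):
    rate = video_gbps(7680, 4320, hz)
    print(f"8K at {hz} Hz: {rate:.1f} Gb/s, needs {links_needed(rate)} DP1.3 link(s)")
```

By this estimate 8K at 30Hz just fits on one link, while 60Hz needs roughly twice the bandwidth, which fits with a desktop card that has multiple DisplayPort outputs reaching the full 60Hz.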

Other Cards and Functionality

GPUs aren’t the only PCIe cards that can be installed in the Breakaway Box, so I can add a variety of other functionality to my laptop if desired. Thunderbolt array controllers minimize the need for SATA or SAS cards in enclosures, but that is a possibility. I installed an Intel X520-DA2 10GbE card into the box, and was copying files from my network at 700MB/s within a minute, without even having to install any new drivers. But unless you need to have SFP ports, most people looking for 10GbE functionality would be better served to look into Sonnet’s Solo 10G for smaller form factor, lower power use, and cheaper price. There are a variety of other options for Thunderbolt 3 to 10GbE hitting the market as well.

The Red-Rocket-X card has been a popular option for external PCIe enclosures over the last few years, primarily for on-set media transcoding. I installed mine in the Breakaway Box to give that functionality a shot as well. I ran into two issues, both of which I was able to overcome, but which are worth noting. First off, the 6 pin power connector is challenging to fit into the poorly designed Rocket power port, due to the retention mechanism being offset for 8pin compatibility. But it can fit if you work at it a bit, although I prefer to keep a 6pin extension cable plugged into my Rocket since I move it around so much. Once I had all of the hardware hooked up, it was recognized in the OS, but installing the drivers from Red resulted in a Code-52 error that is usually associated with USB devices. The recommended solution online was to disable Windows 10 driver signing in the pre-boot environment, and that did the trick. (My theory is that my HP’s SureStart security functionality was hesitating to give direct memory access to an external device, as that is the level of access Thunderbolt devices get to your system, and the Red Rocket-X driver wasn’t signed for that level of security.) Anyhow, the card worked fine after that, and I verified that it accelerated my renders in Premiere Pro and AME. I am looking forward to a day when CUDA acceleration allows me to get that functionality out of my underutilized GPU power instead of requiring a dedicated card.

I did experience an issue with the Quadro P4000, where the fans spun up to 100% when the laptop shut off, hibernated, or went to sleep. None of the other cards had that issue; instead they shut off when the host system did, and turned back on automatically when I booted up the system. I have no idea why the P4000 acted differently than the architecturally very similar P6000. Manually turning off the Breakaway Box or disconnecting the Thunderbolt cable solves the problem with the P4000, but then you have to remember to reconnect it when you are booting up. In the process of troubleshooting the fan issue, I did a few other driver installs, and learned a few tricks. First off, I already knew Quadro drivers can’t run GeForce cards (otherwise why pay for a Quadro), but GeForce drivers can run on Quadro cards. So it makes sense that you will want to install GeForce drivers when mixing both types of GPUs. But I didn’t realize that GeForce drivers apparently take precedence when they are installed. So when I had an issue with the internal Quadro card, reinstalling the Quadro drivers had no effect, since the GeForce drivers were running the hardware. Removing them (with DDU just to be thorough) solved the issue, and got everything operating seamlessly again. Sonnet’s support people were able to send me the solution to the problem on the first try. That was a bit of a hiccup, but once it was solved I could again swap between different GPUs without even rebooting. And most users will always have the same card installed when they connect their eGPU, further simplifying the issue.

Do you need an eGPU?

I really like this unit, and I think that eGPU functionality in general will totally change the high end laptop market for the better. For people who only need high performance at their desk, there will be a class of top end laptop with high end CPU, RAM, and storage, but no GPU, to save on space and weight. (The CPU can’t be improved by an external box, and needs to keep up with the GPU.) There will be another similar class with mid-level GPUs to support basic 3D work on the road, with massive increases at home. I fall in the second category, as I can’t forego all GPU acceleration when I am traveling, or even walking around the office. But I don’t need to be carrying around an 8K rendering beast all the time either. I can limit my gaming, VR work, and heavy renders to my desk. That is the configuration I have been able to use with this ZBook x360: enough power to edit untethered, while combining the internal 6-core CPU with a top end external GPU gives great performance when attached to the Breakaway Box. As always, I still want to go smaller, and plan to test with an even lighter weight laptop as soon as the opportunity arises.

The Breakaway Box is a simple solution to a significant issue. It has no bells and whistles, which I initially appreciated. But the eGPU box is inherently a docking station, so there is an argument to be made for adding other functionality. Due to the x360’s lack of Ethernet connectivity, I wish that was included, and other options include support for USB, SATA, etc. But in theory those other options would be sharing your Thunderbolt bus bandwidth, so simple might also lead to maximum performance. In my case, once I am set up at my next project, using a 10GbE adapter in the second TB3 port on my laptop will be a better solution for top performance and bandwidth anyway. So I am excited about the possibilities that eGPUs bring to the table, now that they are fully supported by the OS and applications I use, and I don’t imagine buying a laptop setup without one anytime in the foreseeable future. The Sonnet Breakaway Box meets my needs in that regard, and has performed very well for me over the last few weeks.

Hi Mike,

according to your experiments, is it possible to drive the Dell UP3218K at full 8K with 60 Hz refresh rate from the HP Zbook Studio x360, connecting with two cables both the Thunderbolt outputs to the monitor? (I would use an HP Zbook Studio x360 with a Quadro P2000 graphic card).

The Zbook Studio manual says: “Supports DisplayPort 1.3 (supported through Thunderbolt 3); DisplayPort 1.4 ready”

Thanks,

Luca

No, I used a single Thunderbolt cable to the Thunderbolt docking station, which has two full sized DisplayPort outputs. Or I used a single Thunderbolt cable to the Sonnet Breakaway Box, with a Quadro GPU in it with multiple DP outputs. It may be possible to run the 8K display directly from the DP1.3 ports on the laptop, but I have never had a USB-C to DP cable, let alone two to test that idea with. That kind of makes me want to test it with the dual TB3 ports on my ZBook X2. I probably won’t be able to do that test for a few months though, as I am traveling,