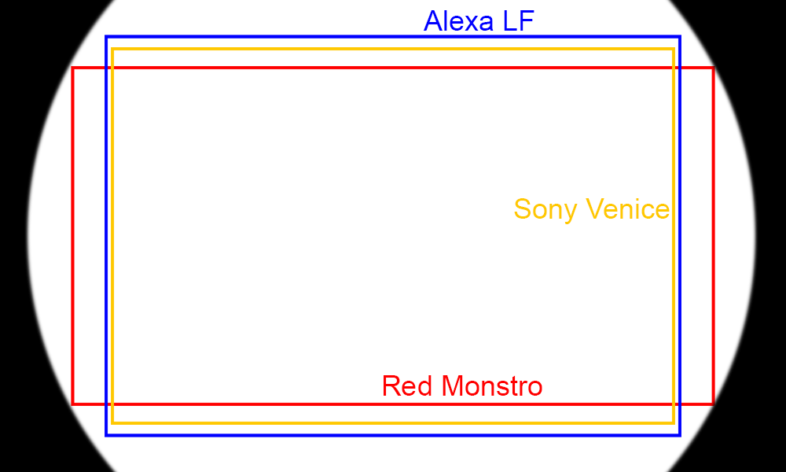

In the last few months, we have seen the release of the Red Monstro, Sony Venice, Arri Alexa LF, and Canon C700 FF, all of which have larger, or full frame, sensors. “Full frame” comes from DSLR terminology, where it means a sensor equivalent to the entire 35mm film area as it was used horizontally in still cameras, as opposed to the smaller crop sensors in cheaper DSLRs. All SLRs used to be full frame with 35mm film, so there was no need for the term until manufacturers started saving money on digital image sensors by making them smaller than 35mm film exposures. Super35mm motion picture cameras, on the other hand, ran the film vertically, resulting in a smaller exposure area per frame. But this was still much larger than most video imagers until the last decade, when 2/3-inch chips were considered premium imagers. The options have grown a lot since then.

Most of the top end cinema cameras released over the last few years have advertised their Super35mm sensors as a huge selling point, as that allows use of any existing S35 lens on the camera. These S35 cameras include the Epic, Helium, & Gemini from Red, Sony’s F5 & F55, Panasonic VaricamLT, Arri Alexa, and Canon C100-500. At the top end, 65mm cameras like the Alexa65 have sensors twice as wide as Super35 cameras, but very limited lens options to cover a sensor that large. Full Frame falls somewhere in between, and allows, among other things, use of any 35mm still film lenses. In the world of film, this format was referred to as VistaVision, but the first widely used full frame digital video camera was Canon’s 5D MkII, the first serious HDSLR. The format has surged in popularity recently, and I had the opportunity to be involved in a test shoot with a number of these new cameras. Keslow Camera was generous enough to give DP Michael Svitak and me access to pretty much all their full frame cameras and lenses for the day, to test the cameras, workflows, and lens options for this new format. We also had the assistance of 1st AC Ben Brady, to help us put all that gear to use, and Michael’s daughter Florendia as our model.

First off is the Red Monstro, which, while technically not the full 24mm height of true full frame, uses the same size lenses due to the width of its 17:9 sensor. It offers the highest resolution of the group, at 8K. It records compressed RAW to R3D files, with options for ProRes and DNxHR up to 4K, all saved to Red Mags. Like the rest of the group, smaller portions of the sensor can be utilized at lower resolution, to pair with smaller lenses. The Red Helium sensor has the same resolution, but in a much smaller Super35 size, allowing a wider selection of lenses to be used. But larger pixels allow more light sensitivity, with individual pixels up to 5 microns wide on the Monstro and Dragon, compared to Helium’s 3.65-micron pixels.

Second, we have Sony’s new Venice camera, with a 6K Full Frame sensor, allowing 4K S35 recording as well. It records XAVC to SxS cards, or compressed RAW in the X-OCN format with the optional AXS-R7 external recorder, which we used. It is worth noting that both full frame recording and integrated anamorphic support require additional special licenses from Sony, but Keslow provided us with a camera that had all of that functionality enabled. With a 36x24mm 6K sensor, the pixels are 5.9 microns, and footage shot at 4K in the S35 mode should be similar to shooting with the F55.

Lastly, we unexpectedly had the opportunity to shoot on Arri’s new AlexaLF (Large Format) camera. At 4.5K, this had the lowest resolution of the group, but that also means the largest sensor pixels, at 8.25 microns, which can increase sensitivity. It records ArriRAW or ProRes to Codex XR Capture Drives with its integrated recorder.

The other new option is the Canon C700 FF, with a 5.9K Full Frame sensor recording RAW, ProRes, or XAVC to CFast cards or Codex Drives. That gives it 6-micron pixels, similar to the Sony Venice. We did not have the opportunity to test that camera this time around, but maybe we will in the future.
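
The pixel sizes quoted above follow from dividing sensor width by horizontal pixel count. Here is a minimal Python sketch of that arithmetic. The function names are my own, and the exact photosite counts (6048 across for the Venice, 8192 for the 8K Red sensors) are assumptions based on published specs rather than figures from this test, so treat the results as approximate.

```python
def pixel_pitch_microns(sensor_width_mm: float, horizontal_pixels: int) -> float:
    """Approximate photosite pitch: sensor width divided by pixel count."""
    return sensor_width_mm * 1000.0 / horizontal_pixels


def sensor_width_mm(pitch_microns: float, horizontal_pixels: int) -> float:
    """Inverse calculation: sensor width implied by pitch and pixel count."""
    return pitch_microns * horizontal_pixels / 1000.0


# Sony Venice: 36mm wide (from the text), 6048 pixels across (assumed).
venice_pitch = pixel_pitch_microns(36.0, 6048)

# Red Monstro and Helium: pitches from the text, 8192 pixels across (assumed).
monstro_width = sensor_width_mm(5.0, 8192)
helium_width = sensor_width_mm(3.65, 8192)

print(f"Venice pitch:  {venice_pitch:.2f} microns")
print(f"Monstro width: {monstro_width:.2f} mm")
print(f"Helium width:  {helium_width:.2f} mm")
```

Under those assumptions, the Monstro works out to roughly 41mm wide and the Helium to roughly 30mm, which is why the same 8K resolution needs full frame lenses on one and S35 lenses on the other.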

One more factor in all of this is the rising popularity of anamorphic lenses. All of these cameras support modes that utilize the part of the sensor covered by anamorphic lenses, and can desqueeze the image for live monitoring and preview. In the digital world, anamorphic essentially cuts your overall resolution in half, barring the unlikely event that we start seeing anamorphic projectors, or cameras with rectangular sensor pixels. But the prevailing attitude appears to be that we have lots of extra resolution available, so it doesn’t really matter if we lose some to anamorphic conversion.
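
To make that resolution trade-off concrete, here is a rough Python sketch of how much of a sensor an anamorphic frame actually uses. Everything in it is illustrative: the function name is hypothetical, and the 2x squeeze, 2.39:1 delivery aspect, and 8192x4320 photosite count are assumptions, not settings we recorded on the day.

```python
def anamorphic_region(sensor_w: int, sensor_h: int,
                      target_aspect: float = 2.39,
                      squeeze: float = 2.0) -> tuple[int, int]:
    """Largest sensor region (in pixels) whose desqueezed image has target_aspect."""
    # The lens squeezes the image horizontally, so the captured region
    # is narrower than the delivered frame by the squeeze factor.
    captured_aspect = target_aspect / squeeze
    width = round(sensor_h * captured_aspect)
    if width <= sensor_w:
        return width, sensor_h  # limited by sensor height
    return sensor_w, round(sensor_w / captured_aspect)  # limited by sensor width


# Assumed 8K 17:9 sensor with a 2x anamorphic lens, delivering 2.39:1.
used_w, used_h = anamorphic_region(8192, 4320)
desqueezed_w = round(used_w * 2.0)

print(f"Sensor region used: {used_w} x {used_h}")
print(f"Desqueezed frame:   {desqueezed_w} x {used_h}")
print(f"Captured columns per displayed column: {used_w / desqueezed_w:.2f}")
```

With these numbers, only about 5,162 of the 8,192 sensor columns are used, and each one is stretched across two columns of the desqueezed frame. That is the sense in which horizontal resolution is halved.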

So what does all of this mean for post production? In theory, sensor size has no direct effect on the recorded files (besides their content), while resolution does. But we also have a number of new formats to deal with, and then we have to handle anamorphic images during finishing.

Ever since I got my hands on one of Dell’s new UP3218K monitors with an 8K screen, I have been collecting 8K assets to display on it. When I first started discussing this shoot with DP Michael Svitak, I was primarily interested in getting some more 8K footage to test new 8K monitors, editing systems, and software as they got released. I was anticipating getting Red footage, which I knew I could play back and process using my existing software and hardware. The other cameras and lens options were added as the plan expanded, and by the time we got to Keslow Camera, they had filled a room with lenses and gear for us to test with. I also had a Dell 8K display connected to my ingest system, and the new 4K Dreamcolor monitor as well. This allowed me to view the recorded footage in the highest resolution possible.

Most editing programs, including Premiere Pro and Resolve, can handle anamorphic footage without issue, but new camera formats can be a bigger challenge. Any RAW file requires info about the sensor pattern in order to debayer it properly, and new compression formats are even more work. Sony’s new compressed RAW format for Venice, called X-OCN, is supported in the newest 12.1 release of Premiere Pro, so I didn’t expect that to be a problem, and its other recording option is XAVC, which should work as well. The Alexa, on the other hand, uses ArriRaw files, which have been supported in Premiere for years, but each new camera shoots a slightly different “flavor” of the file, based on the unique properties of that sensor. Shooting ProRes instead would virtually guarantee compatibility, at the expense of the RAW properties. (Maybe someday ProResRAW will offer the best of both worlds.) The Alexa also has the challenge of recording to Codex drives, which can only be offloaded in OSX or Linux.

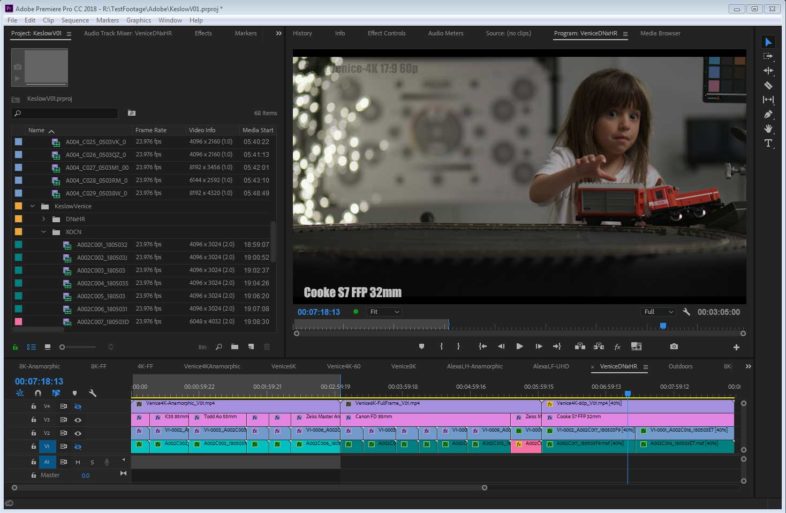

Once I had all of the files on my system, after using a MacBook Pro to offload the media cards, I tried to bring them into Premiere. The Red files came in just fine, but don’t play back smoothly above 1/4 resolution. They play smoothly in RedcineX with my Red Rocket-X enabled, and they export respectably fast in AME (a 5 minute 8K anamorphic sequence to UHD H.265 in 10 minutes), but for some reason Premiere Pro isn’t able to get smooth playback when using the Red Rocket-X. Next I tried the X-OCN files from the Venice camera, which imported without issue and played smoothly on my machine, but looked like they were locked to half or quarter res regardless of what settings I used, even in the exports. I am currently working with Adobe to get to the bottom of that, because they are able to play back my files at full quality, while all my systems have the same issue. Lastly, I tried to import the ArriRaw files from the AlexaLF, but Adobe doesn’t support that new variation of the format yet. I anticipate that will happen soon, since it shouldn’t be too difficult to add that new version to the existing support.

I ended up converting the files I needed to DNxHR in DaVinci Resolve, in order to edit them in Premiere, and put together a short video showing off the various lenses we tested with. Eventually I need to learn how to use Resolve more efficiently, but the type of work I usually do (inter-cutting and nesting sequences with many different resolutions and aspect ratios) lends itself to the way Premiere is designed. Here is a short clip demonstrating some of the lenses we tested with:

This is a web video, so even at UHD, it is not meant to be an analysis of the RAW image quality, but a demonstration of the field of view and overall feel with various lenses and camera settings. The combination of the larger sensors and the anamorphic lenses leads to an extremely wide field of view. The table was only about 10′ from the camera, and we can usually see all the way around it. We also discovered that, at least when recording anamorphic on the Alexa LF, we were recording a wider image than was displayed on the monitor output. You can see in the frame grab below that the live display visible on the right side of the image isn’t showing the full content that got recorded, which is why we didn’t notice that we were recording with the wrong settings and getting so much vignetting from the lens.

We only discovered this after the fact, from this shot, so we didn’t get the opportunity to track down the issue, to see if it was the result of a setting in the camera, or in the monitor. This is why we test things before a shoot, but we didn’t “test” before our camera test, so these things happen. But we learned a lot from the process, and hopefully some of those lessons are conveyed here. A big thanks to Brad Wilson, and the rest of the guys at Keslow Camera for their gear and support of this adventure, and hopefully it will help people better prepare to shoot and post with this new generation of cameras.